VitaRun

Machine learning and mobile healthcare

2019 - Group Project with Jonny Midgen, Alex Gourlay, Benjamin Pheipher, Wesley Norbert, Kenza Zouitene

and Luidmila Zudina

VitaRun monitors selected gait features of a runner through force sensors

and an IMU (Inertial Measurement Unit) embedded in an

insole, and provides live actionable feedback during the run.

It also provides insights derived from this data and keeps a

history of runs, allowing the user to observe personal trends.

My personal contribution to the project was app development, backend communication and data visualisation.

Background

Running is the most popular sport activity in the UK. However, running injuries affect

1 in 4 amateurs.

Running gait and style are factors that runners can control to reduce the risk of injury.

Based on research and interviews with a specialist kinesiologist, the following features were selected:

System

During a run, data from the insole sensors is communicated to the VitaRun app via Bluetooth low energy (BLE).

The app sends the data to the server, where it is analysed. The results of the analysis and tailored recommendations

are returned to the app, where audio and visual feedback is given live.

Historical data is also made available to monitor progress.

System Overview.

Hardware

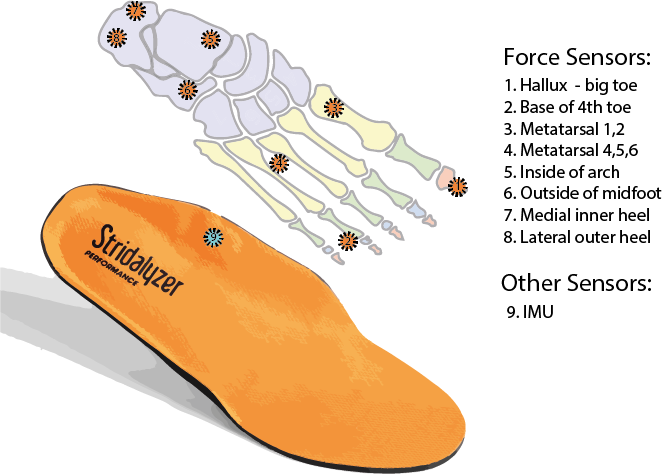

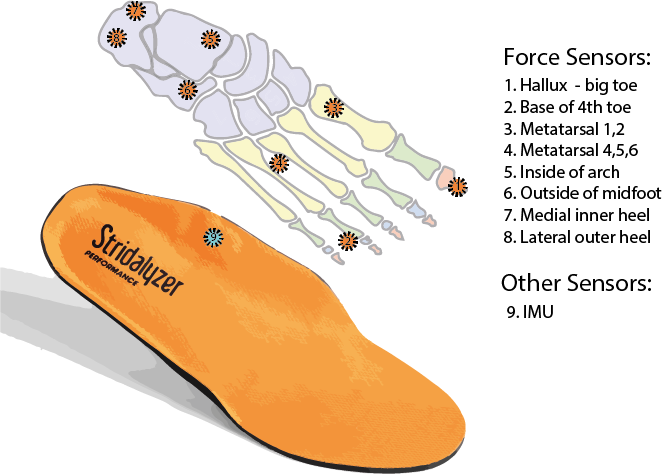

Sensing was performed by

a pair of ‘Stridalyzer’ smart insoles, purchased from the

manufacturer Retisense. Each insole contained

8 pressure sensors and a 6-axis IMU.

Insole sensors

The data from these sensors were streamed to the app using BLE.

App

App Architecture: The app was developed in Android

Studio and constructed in separate modules called

‘fragments’, each of which provided a core UI function.

This architecture

allowed team members to work individually on components

without editing the same scripts concurrently.

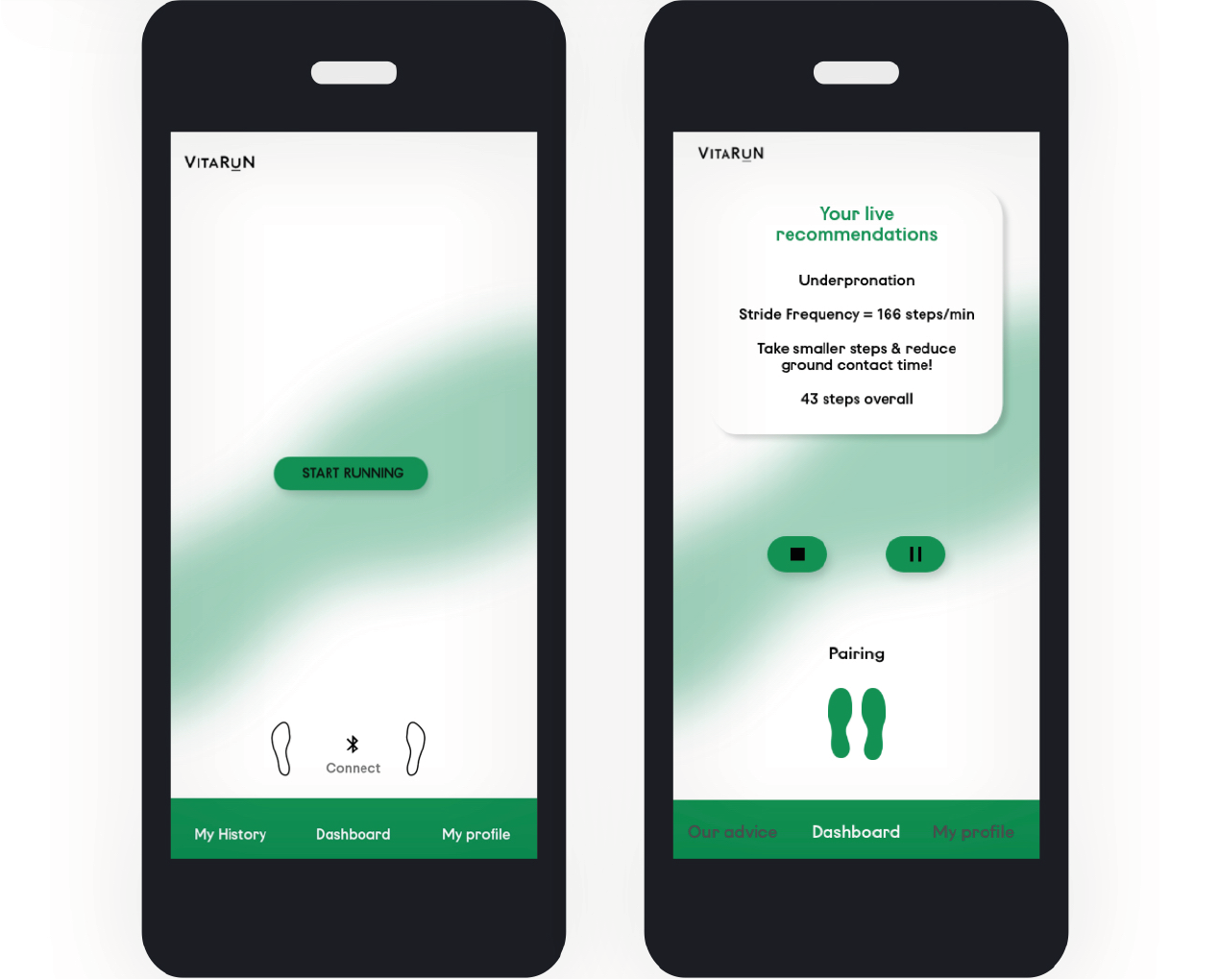

UI Design: The context of use, the intuitiveness and

ease of implementation were considered during the design

of the User Interface (UI). An initial mock-up was made

to describe the app flow from a user's perspective and to

predetermine the app architecture that was implemented in

Android Studio. The app wireframes were developed using

Adobe XD, which allowed for dynamic prototyping.

Feedback: Every 15

seconds, the Recommendations Fragment requests the latest data

from the server and passes the returned string to a method that

updates the VitaRun UI with live recommendations for the

runner. The audio feedback is a cut-down version of this

method: only the stride length recommendations are read out,

every 4 minutes, by a text-to-speech converter. The aim is to

inform users during their run without distracting them. The

audio feature delivers the stride length recommendation

without requiring the runner to stop to access it.
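
The timing described above can be sketched as follows. This is a minimal illustration of the schedule only, written in Python rather than the app's Android code; the function names and the action labels are hypothetical, and only the 15-second and 4-minute intervals come from the text.

```python
POLL_INTERVAL_S = 15       # from the text: recommendations requested every 15 s
AUDIO_INTERVAL_S = 4 * 60  # from the text: stride length read out every 4 min


def feedback_actions(elapsed_s):
    """Return the feedback actions due at `elapsed_s` seconds into a run.

    The action labels are hypothetical placeholders, not names from the app.
    """
    actions = []
    if elapsed_s > 0 and elapsed_s % POLL_INTERVAL_S == 0:
        actions.append("update_ui")
        # Audio is a subset of the visual feedback, on a slower schedule.
        if elapsed_s % AUDIO_INTERVAL_S == 0:
            actions.append("speak_stride_length")
    return actions


def schedule(run_duration_s):
    """List (time, actions) pairs for every poll in a run of the given length."""
    times = range(POLL_INTERVAL_S, run_duration_s + 1, POLL_INTERVAL_S)
    return [(t, feedback_actions(t)) for t in times]
```

Under these assumptions, every sixteenth poll also triggers the spoken stride length recommendation, since 240 s is sixteen 15-second intervals.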

Data Visualisation: Part of the user feedback is a

visualisation of historic runs. This feature is a useful tool

that allows the observation of trends in pronation over

time, monitoring the progression of the condition and the

effectiveness of intervention.

Back End

The back end tasks can be categorised into user profile

management, signal processing and pronation type classification.

They are performed on the server. The server was written

in Python and communicated with the mobile application

through a RESTful (Representational State Transfer) API.

Each buffer of samples is sent in JSON format from the app

to the server via a POST method. The server then computes

the step frequency of the current buffer, and accumulates a

longer secondary buffer of data which is used for dividing

samples into steps and identifying the pronation type. GET

requests are then used to return data as JSON files to

the application. The server was hosted locally, so it was

accessible only from within the same Wi-Fi network.
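
The buffer handling described above might look roughly like the sketch below. It leaves out the HTTP layer and shows only what a POST handler and a GET handler could do with the JSON bodies. The class name, the `samples` field, the result keys and the buffer capacity are all assumptions made for illustration, not details of the VitaRun server.

```python
import json
from collections import deque

SECONDARY_BUFFER_SAMPLES = 1000  # hypothetical capacity of the longer buffer


class RunSession:
    """Holds the longer secondary buffer and the latest results for one run."""

    def __init__(self):
        # Oldest samples are dropped automatically once the buffer is full.
        self.secondary = deque(maxlen=SECONDARY_BUFFER_SAMPLES)
        self.latest = {"buffers_received": 0, "secondary_samples": 0}

    def handle_post(self, body):
        """Ingest one buffer of samples, given the JSON body of a POST request."""
        payload = json.loads(body)
        samples = payload["samples"]  # hypothetical field name
        self.secondary.extend(samples)
        self.latest = {
            "buffers_received": self.latest["buffers_received"] + 1,
            "secondary_samples": len(self.secondary),
        }

    def handle_get(self):
        """Serialise the latest results as the JSON body of a GET response."""
        return json.dumps(self.latest)
```

In a real server the analysis results (step frequency, pronation type) would be stored alongside these counters; they are omitted here to keep the sketch focused on the request flow.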

Signal Processing

Machine learning was used to identify pronation type live

from the insole data.

Each step of a run is

classified as “normal”, “over” or “under” pronation types.

Classification is performed by an LSTM (Long Short-Term

Memory) RNN (Recurrent Neural Network), trained on data from the insoles.

Step Frequency was calculated using a fast Fourier transform (FFT).
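
As a rough illustration of the idea, the sketch below finds the dominant frequency of a one-dimensional force signal with an FFT. It is not the project's code: the input signal, the sample rate and the function names are assumptions, and a small radix-2 FFT is written out by hand only to keep the example dependency-free (a library routine such as NumPy's `numpy.fft.rfft` would be the usual choice).

```python
import cmath


def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def step_frequency_hz(signal, sample_rate_hz):
    """Return the dominant non-DC frequency of `signal` in Hz."""
    n = len(signal)
    if n < 2 or n & (n - 1):
        raise ValueError("signal length must be a power of two")
    # Remove the mean so the constant (DC) component does not dominate.
    mean = sum(signal) / n
    spectrum = fft([s - mean for s in signal])
    # For a real-valued signal only bins 1..n/2 carry distinct information.
    magnitudes = [abs(c) for c in spectrum[1 : n // 2 + 1]]
    peak_bin = magnitudes.index(max(magnitudes)) + 1
    return peak_bin * sample_rate_hz / n
```

The frequency resolution is `sample_rate_hz / n`, so a longer buffer gives a finer estimate at the cost of a slower response to changes in cadence.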

Impact Force was calculated directly from the force sensors.
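
The text does not say how the sensor readings were combined, so the following is only one plausible sketch: it assumes that the eight readings of an insole are summed per sample and that the impact force of a step is the peak of that sum. The function names are hypothetical and the values are in whatever units the sensors report.

```python
SENSORS_PER_INSOLE = 8  # from the text: each insole has 8 pressure sensors


def total_force(sample):
    """Sum the readings of one sample across all sensors of an insole."""
    if len(sample) != SENSORS_PER_INSOLE:
        raise ValueError("expected one reading per sensor")
    return sum(sample)


def peak_impact(step_samples):
    """Return the largest total force seen during one step (an assumed metric)."""
    return max(total_force(sample) for sample in step_samples)
```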

Conclusion

In conclusion, this project has successfully designed a

mobile application to help amateur runners prevent injuries

by giving feedback on their gait and stride frequency. This

feedback is given before, during and after the run.

The system includes insoles, the Android application and the

server.

Future work for VitaRun includes adding traditional features

offered by competing running applications, such as

distance travelled and GPS tracking of the running path, as well

as asymmetric encryption of all information stored in

VitaRun. These two additions would make VitaRun a

more complete product for all runners.